What is the impact of weight initialization on neural networks?

When using an artificial neural network, it may seem that the values of the weights initialized at each layer don't really matter since these values will eventually be adjusted during the backpropagation step. However, in most cases, the initialization of weights can significantly impact the training time and the performance of the model.

In this answer, we will explore some techniques commonly used to initialize weights in artificial neural networks and how these initialized values can negatively impact the training process.

What are some common strategies to initialize weights?

To illustrate the different techniques of weight initialization, we will be using the following neural network with 2 input features: a single hidden layer with 2 nodes and a single output node. The activation functions we'll consider are the 3 most common ones:

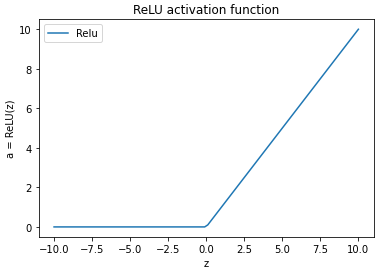

ReLU

tanh

Sigmoid

Convention:

is the weight of a neuron, is its bias, and is its activation. is the layer in which the neuron with weight lies, and is the neuron in layer whose output is being used as a weighted input to this neuron.

1. Initializing all weights to 0

This naive strategy is widely used by many beginners. However, the wrong approach to follow.

Using the ReLU activation function

When a ReLU activation function is used, the activation

So, in our example:

However, since all the weights are 0 (including the bias), both

Now, if the activations are 0, then during backpropagation, when we update the weights via the following weight update rule:

Using the tanh activation function

When a tanh acivation function is used, the activation

As was the case with ReLU, when the weights are updated during backpropagation, they never change and remain as 0.

Using the Sigmoid activation function

When a sigmoid activation function is used, the activation

Here again,

Now, all the neurons in a given layer will have the same non-zero activation value (0.5 in this example). This means that

To understand what happens when we update the weights during backpropagation, we first need to look at how

So, since

What this means is that when the weights are updated by the weight update rule

This implies two things:

Since the activations of all the neurons in the hidden layer are the same, the entire hidden layer acts as a single neuron.

Since all the weights applied to the output of a single neuron remain the same during training, effectively, each neuron has just a single weight coming into it. This means that this model is now linear (and this defeats the purpose of even using a neural network).

2. Initializing all weights to a constant, non-zero value

Whether we use a ReLU, tanh, or Sigmoid activation function, the effect is the same when the weights are initialized to zero with the sigmoid function.

Earlier in our example neural network we saw that:

Now, if

3. Initializing weights randomly from a normal distribution

We have seen that choosing a constant value for all the neurons is an incorrect way to initialize the weights. To overcome this, we can choose weights randomly from a normal distribution instead. Each weight is chosen randomly from a normal distribution with a mean of 0 and a variance of 1. While this would solve the problems explained above, this approach also has its caveats.

To see how this can hinder the training, let's consider the following example where all the inputs

The weighted sum

Now, recall that the mean of the sum of random variables sampled from a normal distribution is the mean of the distribution itself. Hence, the value of

With the variance of

In this case, the gradient descent causes very small updates in the value of the weights (barely moving it in the right direction) and thus the network’s ability to learn is hindered and the training time increases drastically.

To overcome the shortcomings of these methods, in 2010, Xavier introduced the Xavier initialization technique for initializing weights.

Free Resources